Building Data Pipelines for Product Intelligence

Nov 27, 2025 · 5 min read

In today's competitive digital landscape, understanding how users interact with your product isn't optional—it's essential. Yet many data teams struggle to transform scattered product data into meaningful insights that drive growth. The challenge? Product usage data sits isolated in analytics platforms, customer information lives in CRM systems, and operational metrics remain trapped in business applications. This fragmentation costs companies time, money, and opportunities.

Building robust data pipelines for product intelligence solves this problem by consolidating data from multiple sources into a unified system where it can be transformed, analyzed, and activated. This comprehensive guide walks through the entire process—from understanding what product intelligence is to implementing production-grade pipelines that deliver actionable insights at scale.

What is Product Intelligence?

Product intelligence is the process of using data-driven insights and analytics to gain a deeper understanding of how users interact with digital products. Unlike traditional business intelligence that focuses on historical business metrics and operational performance, product intelligence centers on behavioral data—the actions users take within your product, the features they engage with, and the journeys they follow from signup to retention.

For modern SaaS companies and digital product organizations, product intelligence has become the foundation of product-led growth strategies. It enables teams to answer critical questions: Which features drive engagement? Where do users experience friction? What behaviors predict churn? Which customer segments deliver the highest lifetime value?

The convergence of product analytics, customer data, and operational metrics creates a comprehensive view that powers data-driven product decisions. Key metrics tracked include daily/weekly/monthly active users, feature adoption rates, conversion funnel performance, user retention cohorts, session depth and frequency, and customer lifetime value predictions.

Why Product Intelligence Matters for Digital Businesses

Product intelligence transforms how companies build, iterate, and optimize their products. Instead of relying on intuition or anecdotal feedback, teams make decisions grounded in behavioral evidence. This approach delivers measurable competitive advantages:

Data-driven product development: Product managers can prioritize features based on usage data, identify which capabilities drive engagement, and understand which improvements will have the greatest impact on user satisfaction and retention.

Understanding customer journeys: By tracking user behavior from first interaction through long-term engagement, teams identify critical "aha moments" that drive activation, uncover friction points that cause drop-off, and optimize onboarding experiences to improve conversion rates.

Reducing churn through behavioral insights: Early warning signals in product usage patterns help customer success teams intervene before users churn, while cohort analysis reveals which user segments are at highest risk and why.

Personalization at scale: Behavioral segmentation enables personalized experiences, targeted in-product messaging, and feature recommendations that match user needs and maturity levels.

The Product Intelligence Tech Stack

Building comprehensive product intelligence requires integrating data from multiple source systems. The modern tech stack typically includes:

Product analytics platforms like Amplitude, Mixpanel, Pendo, Heap, and FullStory capture granular event data about user interactions, feature usage, and product engagement patterns.

Customer data platforms such as Segment and RudderStack collect and route event streams across tools, providing a unified tracking layer.

CRM and marketing systems including Salesforce, HubSpot, Marketo, and Intercom contain customer attributes, account information, and communication history.

Cloud data warehouses like Snowflake, BigQuery, Redshift, and Databricks serve as the central repository where all this data converges for transformation and analysis.

Business intelligence tools such as Looker, Tableau, and Metabase enable self-service analytics and dashboard creation for stakeholders across the organization.

The Challenge: Breaking Down Data Silos

Despite having powerful analytics tools, most organizations face significant data fragmentation challenges that prevent them from realizing the full value of product intelligence.

Common Data Fragmentation Problems

Product usage data remains locked in analytics platforms with limited export capabilities. Customer information scatters across CRM systems, marketing automation tools, and support platforms. Financial and operational data lives in separate business applications. This fragmentation creates multiple sources of truth, where different tools report conflicting metrics, making it difficult to answer even simple questions like "What's our real retention rate?"

The accessibility problem compounds these issues. Product analytics tools typically require specialized knowledge, limiting insights to a small subset of technical users. Business stakeholders can't easily correlate product behavior with revenue outcomes. Data scientists lack the flexibility to build custom analyses or predictive models on behavioral data.

The Cost of Fragmented Product Data

These data silos exact real costs on organizations:

Delayed decision-making occurs when analysts spend hours or days manually gathering and reconciling data from multiple systems instead of focusing on analysis and insights.

Incomplete customer views lead to missed opportunities when product teams can't see how usage patterns correlate with expansion revenue, or when customer success can't identify at-risk accounts early enough to intervene.

Resource waste multiplies as organizations maintain multiple expensive tools with overlapping capabilities, and data teams build redundant integrations and transformation logic.

Context-switching overhead slows productivity as analysts jump between tools, copy data between systems, and reconstruct business logic in multiple places.

Data Pipeline Architecture for Product Intelligence

Modern data pipelines solve fragmentation by centralizing product intelligence data in cloud warehouses where it can be transformed and accessed by any tool or stakeholder.

Understanding Modern Pipeline Patterns

The evolution from ETL (Extract-Transform-Load) to ELT (Extract-Load-Transform) represents a fundamental shift in data architecture. Traditional ETL transformed data before loading it into warehouses, requiring specialized transformation servers and complex pre-processing logic. Modern ELT pipelines load raw data first, then transform it inside the warehouse using SQL-based tools.

ELT suits product intelligence for several reasons. Cloud data warehouses now offer elastic, scalable compute, which makes in-warehouse transformations fast and often more cost-effective than running separate transformation servers. Preserving raw data keeps a complete historical record without loss of fidelity. Because the raw data is already loaded, analysts can iterate on business logic without re-extracting from the source. And because transformations are written as code, teams can track changes in version control just like application code.

Core Pipeline Components

A production-grade product intelligence pipeline consists of six key components:

Data ingestion extracts data from product analytics platforms, CRM systems, and business applications using API connectors or native integrations. Tools like Fivetran, Airbyte, and Stitch automate this process.

Data loading lands raw data in cloud warehouses, typically in staging schemas that preserve the source system structure. When loads are append-only, this creates an audit trail of historical data that downstream models can always be rebuilt from.

Data transformation cleans, standardizes, and enriches raw data using SQL-based transformation frameworks like dbt (data build tool). This is where scattered data becomes unified product intelligence.

Data orchestration manages scheduling, dependencies, and execution of pipeline tasks. Modern orchestrators ensure transformations run in the correct order and handle failures gracefully.

Data quality and testing validates that data meets expectations through automated tests, schema validation, and anomaly detection to catch issues before they impact downstream users.

Data activation serves transformed data to business intelligence tools, reverse ETL systems, and operational applications that activate insights.

Building the Pipeline: Practical Framework

Phase 1: Data Source Integration

Start by identifying your critical product intelligence data sources. For most SaaS companies, this includes at minimum: a product analytics platform capturing behavioral events, CRM data with customer and account attributes, and billing/subscription data for revenue correlation.

Modern ELT tools provide pre-built connectors for popular data sources, dramatically reducing integration complexity. When evaluating connectors, prioritize those supporting incremental extraction (syncing only new/changed data), schema change handling (automatically adapting to source system updates), and reliable error handling with automatic retries.
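To make incremental extraction and retry handling concrete, here is a minimal sketch in Python. It does not reflect how any particular connector is implemented: the `updated_at` field, the `fetch_with_retries` and `incremental_sync` helpers, and the in-memory `rows` list are all hypothetical stand-ins for a real source API.

```python
import time
from typing import Callable, Optional

def fetch_with_retries(fetch: Callable[[], list], max_attempts: int = 3,
                       base_delay: float = 1.0) -> list:
    """Call fetch(), retrying transient connection errors with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")

def incremental_sync(source_rows: list, cursor: Optional[str]):
    """Return rows changed strictly after `cursor`, plus the new cursor value.

    Assumes `updated_at` is an ISO-8601 UTC string in one consistent format,
    so plain string comparison orders rows correctly.
    """
    changed = [r for r in source_rows if cursor is None or r["updated_at"] > cursor]
    new_cursor = max((r["updated_at"] for r in changed), default=cursor)
    return changed, new_cursor

# Hypothetical source data standing in for an API response.
rows = [
    {"id": 1, "updated_at": "2025-01-01T00:00:00Z"},
    {"id": 2, "updated_at": "2025-01-02T00:00:00Z"},
]
changed, cursor = incremental_sync(fetch_with_retries(lambda: rows), cursor=None)
# A second run with the saved cursor picks up nothing new.
changed_again, cursor = incremental_sync(rows, cursor)
```

A strict "greater than" comparison can miss rows that share the cursor's exact timestamp, so real connectors often re-read a small overlap window and deduplicate downstream.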

For behavioral event data, pay special attention to event taxonomy design. Standardize event naming conventions across your product, ensure all events include critical context (user ID, timestamp, session ID), and document event definitions and property schemas for future maintainability.
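One way to enforce an event taxonomy is a small validation check at ingestion time. The sketch below assumes a snake_case naming convention and an `event_name` key, neither of which is prescribed here; only the three required context fields come from the guidance above.

```python
import re

# Context fields every event is expected to carry.
REQUIRED_CONTEXT = ("user_id", "timestamp", "session_id")
# Assumed convention: lowercase words joined by underscores, e.g. "signup_completed".
EVENT_NAME_PATTERN = re.compile(r"[a-z]+(_[a-z]+)*")

def validate_event(event: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means the event is valid."""
    problems = []
    name = event.get("event_name", "")
    if not EVENT_NAME_PATTERN.fullmatch(name):
        problems.append(f"event_name {name!r} is not snake_case")
    for field in REQUIRED_CONTEXT:
        if not event.get(field):
            problems.append(f"missing required field {field!r}")
    return problems

good = {"event_name": "signup_completed", "user_id": "u1",
        "timestamp": "2025-01-01T10:00:00Z", "session_id": "s1"}
bad = {"event_name": "Signup Completed", "user_id": "u1",
       "timestamp": "2025-01-01T10:00:00Z"}
```

Here `validate_event(good)` returns an empty list, while `validate_event(bad)` reports both the naming violation and the missing `session_id`.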

Phase 2: Landing Data in Your Warehouse

Organize raw data in a staging zone that maintains separation from transformed models. Common patterns include prefixing staging schemas with "raw_" or organizing by source system (raw_amplitude, raw_salesforce, etc.).

For time-series product data, organize tables by event timestamp so that queries scan only the relevant date ranges, which improves performance and reduces cost. The mechanism differs by warehouse: BigQuery supports explicitly partitioned tables, Snowflake partitions data automatically into micro-partitions and lets you add clustering keys, and Redshift relies on sort keys and distribution keys to skip irrelevant blocks.

Product analytics tools often export nested JSON structures. Your landing process should preserve this structure in native JSON/VARIANT columns, allowing transformation logic to flatten and extract fields as needed. This provides flexibility as your analytics requirements evolve.
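The flattening step that transformation logic performs later can be sketched as follows. The payload shape and the double-underscore separator are illustrative assumptions, not the export format of any specific analytics tool.

```python
import json

def flatten(obj: dict, prefix: str = "", sep: str = "__") -> dict:
    """Recursively flatten nested dicts into a single level of column-like keys."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value  # scalars and lists are kept as-is
    return flat

# Hypothetical raw export row stored as a JSON string.
raw = '{"event": "page_view", "user": {"id": "u1", "plan": {"tier": "pro"}}, "tags": ["a", "b"]}'
row = flatten(json.loads(raw))
# row == {"event": "page_view", "user__id": "u1", "user__plan__tier": "pro", "tags": ["a", "b"]}
```

Keeping the raw JSON in the landing table and flattening only in transformations means a new nested property can be surfaced later without re-extracting from the source.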

Phase 3: Transformation with dbt

dbt has become the de facto standard for SQL-based transformation in modern data stacks. It brings software engineering best practices to analytics: version control, testing, documentation, and modularity.

Structure your dbt project in three layers:

Staging models create a clean interface to raw source data. They handle column renaming, data type casting, basic filtering, and deduplication, but avoid complex business logic. By convention, each source table gets its own staging model, grouped by source system, and these models serve as the foundation for downstream transformation.

Intermediate models implement reusable business logic that's too complex for staging but not yet ready for end users. This might include sessionization logic, user identity resolution across devices, or feature usage calculations. Intermediate models remain hidden from end users and serve as building blocks.

Mart models deliver business-ready datasets optimized for specific use cases. Common product intelligence marts include: user dimension tables with enriched attributes, event fact tables with standardized schemas, feature adoption metrics by user segment, conversion funnel datasets, and retention cohort analyses.
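In dbt these layers are written as SQL models. To illustrate the division of responsibility in the same language as the other examples in this guide, here is a rough Python analogue of what a staging model does. The raw column names (`EventId`, `UserId`, `EventName`, `Time`) are hypothetical.

```python
from datetime import datetime

def stage_events(raw_rows: list) -> list:
    """Staging-style cleanup: rename, cast, normalize, and deduplicate.

    Deliberately contains no business logic; that belongs in later layers.
    """
    seen = set()
    staged = []
    for row in raw_rows:
        event_id = str(row["EventId"])
        if event_id in seen:
            continue  # keep the first occurrence of each event
        seen.add(event_id)
        staged.append({
            "event_id": event_id,
            "user_id": str(row["UserId"]),
            "event_name": row["EventName"].strip().lower(),
            "occurred_at": datetime.fromisoformat(row["Time"]),
        })
    return staged

raw_rows = [
    {"EventId": 1, "UserId": 42, "EventName": " Page_View ", "Time": "2025-01-01T10:00:00+00:00"},
    {"EventId": 1, "UserId": 42, "EventName": " Page_View ", "Time": "2025-01-01T10:00:00+00:00"},
]
staged = stage_events(raw_rows)
```

Intermediate and mart layers would then build on `staged` rather than on the raw rows, so a change in the source's column names only has to be handled in one place.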

Phase 4: Modeling Product Intelligence Data

Product intelligence data requires specialized modeling patterns to handle high-volume behavioral events efficiently.

Event-based modeling treats user interactions as immutable facts. Create a standardized events table that unifies data from multiple analytics platforms, ensuring consistent schema regardless of source. Key fields include event_id, user_id, session_id, timestamp, event_name, and event_properties (stored as JSON for flexibility).

User dimension tables maintain current state and attributes for each user. Implement slowly changing dimensions (typically Type 2) to track how user attributes evolve over time. This enables historical analysis like "What did this user's profile look like when they churned?"
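The Type 2 pattern can be sketched in a few lines. This is an in-memory illustration under assumed field names (`valid_from`, `valid_to`, `attrs`); in a warehouse the same logic would typically be handled by a snapshot or merge process rather than hand-written code.

```python
from datetime import date

def apply_scd2(history: list, user_id: str, new_attrs: dict, as_of: date) -> list:
    """Close the user's current row if attributes changed, then append a new current row."""
    current = next((r for r in history
                    if r["user_id"] == user_id and r["valid_to"] is None), None)
    if current is not None:
        if current["attrs"] == new_attrs:
            return history  # nothing changed, keep the current row open
        current["valid_to"] = as_of
    history.append({"user_id": user_id, "attrs": dict(new_attrs),
                    "valid_from": as_of, "valid_to": None})
    return history

def attrs_as_of(history: list, user_id: str, when: date):
    """Look up the attributes that were in effect on a given date."""
    for r in history:
        if (r["user_id"] == user_id and r["valid_from"] <= when
                and (r["valid_to"] is None or when < r["valid_to"])):
            return r["attrs"]
    return None

history = []
apply_scd2(history, "u1", {"plan": "free"}, date(2025, 1, 1))
apply_scd2(history, "u1", {"plan": "pro"}, date(2025, 3, 1))
apply_scd2(history, "u1", {"plan": "pro"}, date(2025, 4, 1))  # no change, no new row
```

With this history, `attrs_as_of(history, "u1", date(2025, 2, 1))` returns the free-plan attributes, which is the kind of point-in-time question the churn example above asks.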

Sessionization groups user events into logical sessions based on time gaps or explicit session identifiers. A common default starts a new session after 30 minutes of inactivity, though the right threshold varies by product type.
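A gap-based sessionizer is short enough to sketch directly. The example assumes events are dicts with `user_id` and `timestamp` keys and writes a derived `session_id`; treating a gap of exactly 30 minutes as the same session is an arbitrary choice made here.

```python
from datetime import datetime, timedelta

def sessionize(events: list, gap: timedelta = timedelta(minutes=30)) -> list:
    """Assign a per-user session id; a new session starts when inactivity exceeds `gap`."""
    last_seen = {}   # user_id -> timestamp of that user's previous event
    session_no = {}  # user_id -> running session counter
    out = []
    for e in sorted(events, key=lambda e: (e["user_id"], e["timestamp"])):
        uid, ts = e["user_id"], e["timestamp"]
        if uid not in last_seen or ts - last_seen[uid] > gap:
            session_no[uid] = session_no.get(uid, 0) + 1
        last_seen[uid] = ts
        out.append({**e, "session_id": f"{uid}-{session_no[uid]}"})
    return out

events = [
    {"user_id": "u1", "timestamp": datetime(2025, 1, 1, 10, 0)},
    {"user_id": "u1", "timestamp": datetime(2025, 1, 1, 10, 10)},
    {"user_id": "u1", "timestamp": datetime(2025, 1, 1, 10, 50)},  # 40-minute gap
    {"user_id": "u2", "timestamp": datetime(2025, 1, 1, 10, 5)},
]
sessions = [e["session_id"] for e in sessionize(events)]
# sessions == ["u1-1", "u1-1", "u1-2", "u2-1"]
```

In SQL the same idea is usually expressed with a window function that compares each event's timestamp to the previous one for the same user.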

Incremental processing for large event datasets is critical for performance and cost. dbt's incremental materialization strategy processes only new events since the last run, dramatically reducing transformation time for tables with billions of rows.
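The idea behind incremental processing is to derive a high-water mark from the target table and process only rows beyond it. The sketch below models the target as a dict keyed by a unique key; it illustrates the pattern and is not dbt's implementation. The names `incremental_merge`, `event_id`, and `timestamp` are assumptions.

```python
def incremental_merge(target: dict, source_events: list,
                      unique_key: str = "event_id", ts_field: str = "timestamp") -> int:
    """Upsert only events newer than the latest timestamp already in `target`.

    Returns the number of rows processed in this run.
    """
    high_water = max((row[ts_field] for row in target.values()), default=None)
    new_rows = [e for e in source_events
                if high_water is None or e[ts_field] > high_water]
    for e in new_rows:
        target[e[unique_key]] = e  # insert, or overwrite a row with the same key
    return len(new_rows)

target = {}
source = [{"event_id": "a", "timestamp": 1}, {"event_id": "b", "timestamp": 2}]
first_run = incremental_merge(target, source)    # full load: 2 rows
source.append({"event_id": "c", "timestamp": 3})
second_run = incremental_merge(target, source)   # only the new row: 1 row
```

Late-arriving events with timestamps older than the high-water mark would be skipped by this filter, which is why production models often reprocess a lookback window of a few hours or days.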

Phase 5: Orchestration and Automation

Modern orchestration moves beyond cron jobs to declarative, dependency-aware scheduling. Tools like Airflow, Prefect, and Dagster, as well as specialized platforms such as Paradime's Bolt, manage complex workflows where hundreds of models depend on each other.

Key orchestration capabilities include:

Declarative DAG definitions that express dependencies between models rather than explicit ordering. The orchestrator determines the optimal execution sequence.

State-aware execution for incremental models that tracks what's already been processed and handles failures gracefully.

Parallel execution that runs independent models simultaneously to minimize total pipeline runtime.

Error handling and alerting that notifies the appropriate teams when issues occur and provides context for debugging.
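Dependency-aware ordering and parallel batches can be illustrated with Python's standard-library `graphlib` module (Python 3.9+). This is a sketch of the concept, not how any of the orchestrators above are implemented, and the model names are hypothetical.

```python
from graphlib import TopologicalSorter

def execution_batches(dependencies: dict) -> list:
    """Group models into batches; models within a batch have no unmet dependencies
    and could run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches

# Each key maps a model to the set of models it depends on.
deps = {
    "stg_events": set(),
    "stg_users": set(),
    "int_sessions": {"stg_events"},
    "mart_retention": {"int_sessions", "stg_users"},
}
batches = execution_batches(deps)
# batches == [["stg_events", "stg_users"], ["int_sessions"], ["mart_retention"]]
```

Only the dependencies are declared; the execution order falls out of the graph, which is the practical meaning of "declarative" in this context.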

Phase 6: Data Quality and Testing

Production pipelines require comprehensive testing to catch issues before they impact downstream users.

Schema tests validate data structure and types, ensuring critical fields are never null, checking uniqueness constraints on keys, and verifying referential integrity across tables.

Custom business logic tests encode domain knowledge, such as verifying that all events have valid timestamps, checking that user counts never decrease in cumulative tables, and ensuring metric calculations match known benchmarks.

Anomaly detection identifies unexpected patterns through statistical monitoring. Modern data observability tools can alert on sudden volume changes, metric distributions outside normal ranges, and schema evolution that breaks downstream queries.

Column-level lineage tracks how data flows from sources through transformations to final outputs, enabling impact analysis when issues occur and helping teams understand dependencies.
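The schema tests and volume monitoring described above reduce to a handful of small checks. The sketch below names its helpers after dbt's generic tests (`not_null`, `unique`, `relationships`) and, like those tests, returns the failing rows. The z-score threshold of 3 is an assumed default, not a recommendation.

```python
from statistics import mean, stdev

def not_null(rows: list, column: str) -> list:
    """Rows where `column` is missing or None."""
    return [r for r in rows if r.get(column) is None]

def unique(rows: list, column: str) -> list:
    """Rows whose `column` value was already seen earlier in `rows`."""
    seen, dupes = set(), []
    for r in rows:
        value = r.get(column)
        if value in seen:
            dupes.append(r)
        seen.add(value)
    return dupes

def relationships(child_rows: list, column: str, parent_rows: list, parent_column: str) -> list:
    """Child rows whose non-null `column` value has no match in the parent table."""
    parents = {p[parent_column] for p in parent_rows}
    return [r for r in child_rows
            if r.get(column) is not None and r[column] not in parents]

def volume_anomaly(daily_counts: list, today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it sits more than `z_threshold` standard deviations
    from the recent mean. Needs at least two historical values."""
    mu, sigma = mean(daily_counts), stdev(daily_counts)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold

users = [{"user_id": "u1"}, {"user_id": "u2"}]
events = [
    {"event_id": 1, "user_id": "u1"},
    {"event_id": 1, "user_id": "u3"},   # duplicate key and unknown user
    {"event_id": 2, "user_id": None},   # null user
]
```

A test passes when its function returns an empty list, so a CI job can fail the build whenever any check returns rows.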

Best Practices for Production-Grade Pipelines

Version Control and CI/CD

Treat transformation code like production application code. Store all dbt models, tests, and documentation in Git repositories with clear branching strategies. Implement pull request reviews where team members validate logic before merging. Run automated tests in CI environments to catch breaking changes before deployment.

Performance Optimization

Query optimization becomes critical as event volumes scale. Leverage incremental materializations for large fact tables, use views for lightweight transformations that don't justify storage costs, and implement clustering and partitioning based on common query patterns.

Monitor warehouse costs continuously and attribute spending to specific models or teams. This visibility enables informed decisions about which optimizations deliver the highest ROI.

Observability and Monitoring

Comprehensive observability requires tracking pipeline execution status, data freshness, data quality metrics, and query performance. Set up alerts to Slack, PagerDuty, or other notification systems when issues occur.

Lineage visualization shows how data flows from sources through transformations to dashboards, enabling impact analysis and helping teams understand the blast radius of potential changes.

Documentation and Governance

Maintain living documentation that evolves with your pipelines. dbt's built-in documentation system generates searchable catalogs of all models, columns, and tests. Supplement with business glossaries that define metrics consistently across the organization.

Implement access controls and security policies appropriate for your data sensitivity. Ensure compliance with privacy regulations like GDPR and CCPA through appropriate data retention and anonymization strategies.

Common Use Cases and Analytics Patterns

User Engagement Analysis

Calculate daily, weekly, and monthly active user metrics segmented by cohort, geography, or user attributes. Track feature adoption rates to understand which capabilities drive engagement. Analyze session depth and frequency patterns to identify power users versus casual users.
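Active-user metrics come down to counting distinct users in a trailing window. The sketch below assumes rolling 1-, 7-, and 30-day windows and an `event_date` field; calendar-week or calendar-month definitions are equally valid and would need different window logic.

```python
from datetime import date, timedelta

def active_users(events: list, as_of: date, window_days: int) -> int:
    """Distinct users with at least one event in the trailing window ending on `as_of`."""
    start = as_of - timedelta(days=window_days - 1)
    return len({e["user_id"] for e in events if start <= e["event_date"] <= as_of})

events = [
    {"user_id": "u1", "event_date": date(2025, 1, 31)},
    {"user_id": "u1", "event_date": date(2025, 1, 30)},
    {"user_id": "u2", "event_date": date(2025, 1, 28)},
    {"user_id": "u3", "event_date": date(2025, 1, 5)},
]
as_of = date(2025, 1, 31)
dau = active_users(events, as_of, 1)    # 1
wau = active_users(events, as_of, 7)    # 2
mau = active_users(events, as_of, 30)   # 3
stickiness = dau / mau                  # share of monthly users active today
```

Segmenting by cohort or geography amounts to filtering `events` before calling the same function.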

Conversion Funnel Optimization

Model multi-step conversion funnels from signup through activation to paid conversion. Identify drop-off points and bottlenecks through cohort analysis. Attribute conversion improvements to specific product changes or experiments.
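A strict ordered funnel can be computed by walking each user's events in time order and advancing one step at a time. The step names and integer timestamps below are hypothetical, and this sketch ignores time-window constraints (for example, "activated within 7 days") that real funnels often add.

```python
def funnel_counts(events: list, steps: list) -> list:
    """Count users who reached each step, requiring steps to occur in order."""
    by_user = {}
    for e in sorted(events, key=lambda e: e["timestamp"]):
        by_user.setdefault(e["user_id"], []).append(e["event_name"])
    counts = [0] * len(steps)
    for names in by_user.values():
        position = 0  # index of the next step this user has yet to reach
        for name in names:
            if position < len(steps) and name == steps[position]:
                counts[position] += 1
                position += 1
    return counts

steps = ["signup", "activate", "subscribe"]
events = [
    {"user_id": "u1", "event_name": "signup", "timestamp": 1},
    {"user_id": "u1", "event_name": "activate", "timestamp": 2},
    {"user_id": "u1", "event_name": "subscribe", "timestamp": 3},
    {"user_id": "u2", "event_name": "signup", "timestamp": 1},
    {"user_id": "u2", "event_name": "subscribe", "timestamp": 2},  # skipped activation
    {"user_id": "u3", "event_name": "activate", "timestamp": 1},   # out of order
    {"user_id": "u3", "event_name": "signup", "timestamp": 2},
]
counts = funnel_counts(events, steps)
# counts == [3, 1, 1]
```

Dividing each count by the previous one gives step-to-step conversion, and the largest drop marks the bottleneck: here it is signup to activation, where only one of three users continues.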

Retention and Churn Analytics

Build cohort retention curves that track how user engagement evolves over time. Develop churn prediction models that identify at-risk users before they leave. Create win-back campaign targeting based on past behavior patterns. Calculate customer lifetime value for different segments to inform acquisition spending.
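A cohort retention table groups users by signup month and measures what fraction were active in each subsequent month. The sketch below assumes monthly cohorts and takes signups as a dict and activity as `(user_id, date)` pairs; both shapes are illustrative.

```python
from collections import defaultdict
from datetime import date

def month_index(d: date) -> int:
    """Months since year 0, so differences give whole-month offsets."""
    return d.year * 12 + d.month

def retention_by_cohort(signups: dict, activity: list) -> dict:
    """Return {(year, month): {month_offset: fraction_of_cohort_active}}."""
    cohort_users = defaultdict(set)
    for uid, signed_up in signups.items():
        cohort_users[(signed_up.year, signed_up.month)].add(uid)
    active = defaultdict(set)  # (cohort, offset) -> users active in that month
    for uid, day in activity:
        if uid not in signups:
            continue
        signed_up = signups[uid]
        offset = month_index(day) - month_index(signed_up)
        if offset >= 0:
            active[((signed_up.year, signed_up.month), offset)].add(uid)
    result = {}
    for (cohort, offset), users in active.items():
        result.setdefault(cohort, {})[offset] = len(users) / len(cohort_users[cohort])
    return result

signups = {"u1": date(2025, 1, 5), "u2": date(2025, 1, 20), "u3": date(2025, 2, 1)}
activity = [
    ("u1", date(2025, 1, 6)), ("u2", date(2025, 1, 21)),
    ("u1", date(2025, 2, 10)),
    ("u3", date(2025, 2, 2)), ("u3", date(2025, 3, 3)),
]
retention = retention_by_cohort(signups, activity)
# retention[(2025, 1)] == {0: 1.0, 1: 0.5}
```

Reading across a cohort's row gives its retention curve, and comparing the same offset across cohorts shows whether product changes are improving retention over time.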

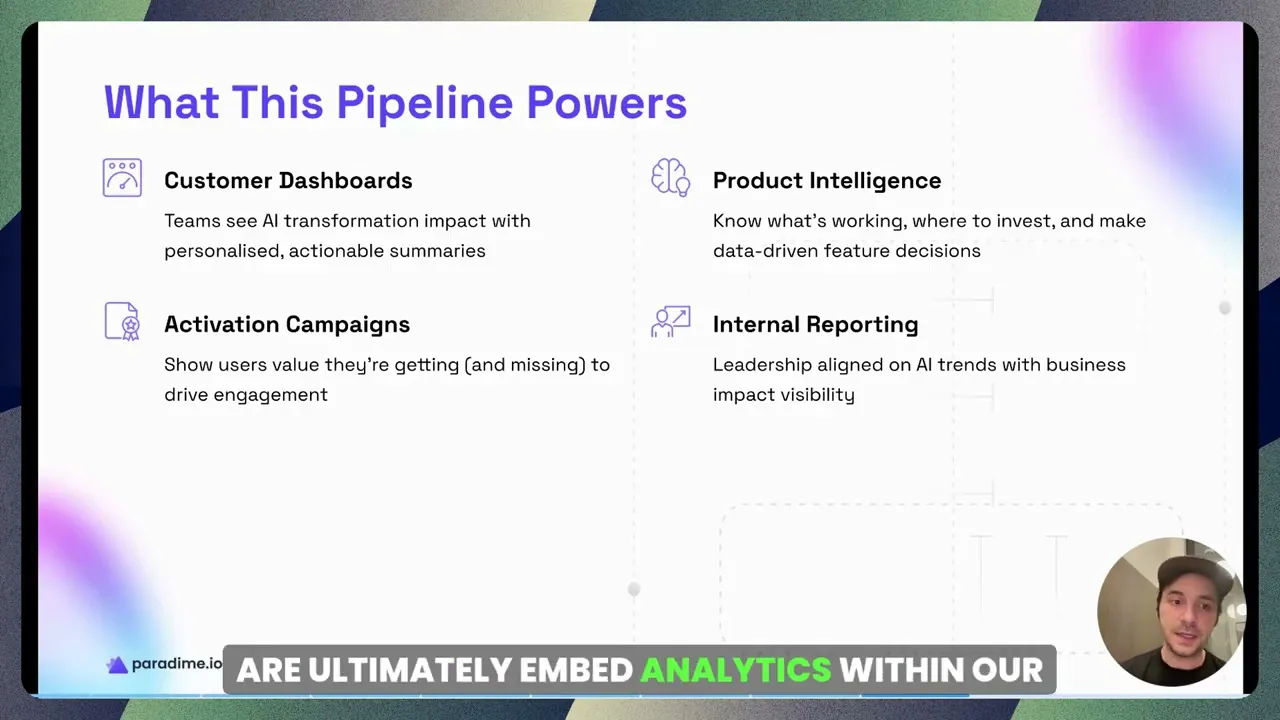

Product Analytics Dashboards

Enable self-service analytics through curated datasets and semantic layers. Build executive KPI dashboards that track north star metrics. Empower product managers with feature-level analytics. Provide customer success teams with health scores based on product engagement.

Modern Solutions: Eliminating Tool Fragmentation

Traditional analytics stacks require stitching together separate tools for development, orchestration, monitoring, and documentation. This fragmentation creates context-switching overhead and integration complexity.

Modern unified platforms consolidate the entire analytics workflow into single workspaces. Paradime exemplifies this approach as an AI-powered platform that acts as 'Cursor for Data,' eliminating tool sprawl through integration of development, orchestration, and observability.

The Code IDE features the DinoAI co-pilot for SQL generation, documentation, and debugging assistance, dramatically accelerating development cycles. The Bolt orchestration system provides declarative scheduling with dependency management and state-aware execution. Radar monitoring delivers column-level lineage, anomaly detection, and real-time alerts to PagerDuty, Datadog, and Slack.

Companies like Tide, Customer.io, and Emma have achieved 50-83% productivity improvements by consolidating their analytics workflows in Paradime. Development cycles accelerate 10x through AI assistance and integrated testing. Warehouse costs drop 20%+ through optimization insights and automated performance monitoring. Teams achieve production reliability at scale, running hundreds of models hourly with full observability.

Conclusion

Building data pipelines for product intelligence transforms scattered data into competitive advantage. By consolidating product analytics, customer data, and operational metrics in cloud warehouses, organizations gain complete visibility into user behavior and business outcomes.

The modern ELT approach with dbt transformations enables scalable, maintainable pipelines that evolve with business needs. Comprehensive testing, orchestration, and monitoring ensure production reliability. Unified platforms eliminate tool fragmentation and accelerate time-to-insight.

Start by auditing your current product intelligence sources and identifying critical data gaps. Choose a cloud data warehouse that fits your scale and budget. Implement core transformation patterns with dbt, focusing on staging, intermediate, and mart layers. Establish orchestration and monitoring to ensure reliability. Iterate and scale based on business needs and user feedback.

The investment in robust product intelligence pipelines pays dividends through faster decision-making, improved product outcomes, and competitive differentiation in increasingly data-driven markets.