🦖 DinoAI - Build Faster and Spend Less

Discover how DinoAI's new .dinoprompts, Credit Saver Mode, and Voice-to-Text features are revolutionizing analytics engineering productivity while reducing AI costs. From reusable prompt libraries to intelligent context management, see how teams can work faster and spend smarter.

Parker Rogers

Jun 26, 2025

·

6 min read

Data teams face a constant balancing act: deliver faster while managing costs. Traditional AI tools often force you to choose between speed and efficiency, leaving teams either moving slowly or burning through credits at an unsustainable pace. But what if you didn't have to make that trade-off?

In our eighth DinoAI livestream, we unveiled groundbreaking features that fundamentally change this equation. From reusable prompt libraries to intelligent cost optimization, these innovations represent a new standard in AI-assisted dbt™ development—one where increased productivity actually reduces costs.

The Repetition Problem: Most Data Teams Waste Time with AI

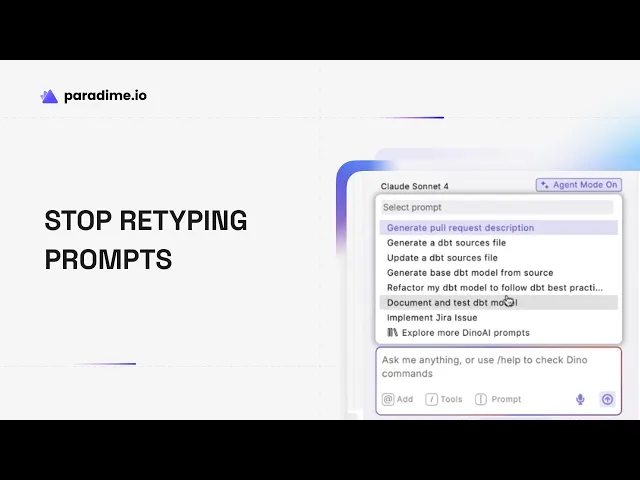

Every analytics engineer knows the frustration: you've crafted the perfect prompt for updating source files, only to find yourself retyping variations of it dozens of times. This repetitive cycle doesn't just waste time—it introduces inconsistency and reduces the quality of AI interactions across your team.

"One of the problems or challenges we saw with prompts in general is that we often tend to repeat ourselves, writing the same thing on and on and on within the chat input," explains Fabio (co-founder, Paradime) during the demonstration. This observation led to the development of DinoAI's most requested feature: .dinoprompts.

Introducing .dinoprompts: Your Team's AI Prompt Library

The solution to prompt repetition isn't just automation—it's standardization. DinoAI's new .dinoprompts feature allows teams to create, share, and reuse sophisticated prompt libraries that maintain consistency while dramatically reducing setup time.

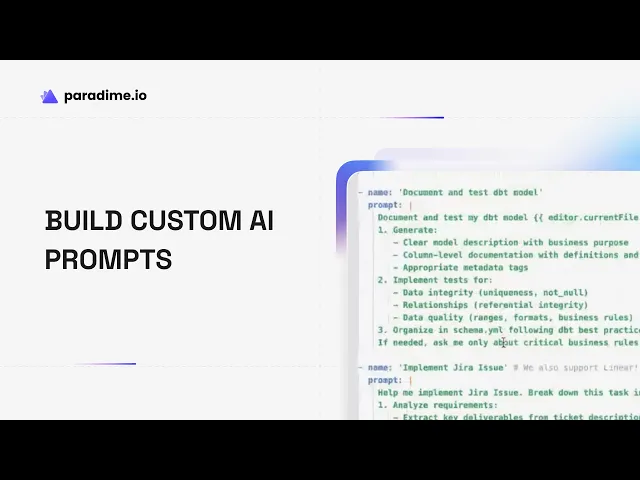

Similar to .dinorules, .dinoprompts exists as a YAML file in your repository, making it version-controlled and collaborative. Out of the box, DinoAI provides essential prompts for common tasks like updating sources.yml files, generating documentation, and creating tests. But the real power emerges when teams customize these prompts for their specific workflows.

Variables: Context-Aware Automation

What sets .dinoprompts apart from simple text templates is its sophisticated variable system. Using familiar Jinja syntax, prompts can dynamically incorporate context from your development environment:

{{editor.currentFile.path}} automatically includes the file you're currently editing

{{git.diff.withOriginDefaultBranch}} captures changes for pull request descriptions

Additional variables continue to expand the system's contextual awareness

This means a single prompt for "document and test dbt™ model" automatically knows which model you're working on, analyzes its structure, and generates appropriate documentation and tests—all without requiring you to specify the file path or copy-paste code.
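The variable mechanism can be pictured with a short Python sketch. This is not DinoAI's implementation. The `PROMPTS` dictionary stands in for a parsed .dinoprompts file, and the prompt name, the `render` and `lookup` helpers, and the shape of the context object are all hypothetical. Only the `{{editor.currentFile.path}}` placeholder comes from the feature itself.

```python
import re
from typing import Any, Mapping

# Hypothetical prompt library, standing in for a parsed .dinoprompts file.
# The prompt name and wording are illustrative only.
PROMPTS = {
    "document_and_test_model": (
        "Document and add tests for the dbt model in {{editor.currentFile.path}}. "
        "Write column descriptions and generic tests in the matching schema YAML."
    ),
}

# Matches {{ dotted.variable.path }}, tolerating optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def lookup(context: Mapping[str, Any], dotted: str) -> Any:
    """Walk a nested dict using a dotted path such as 'editor.currentFile.path'."""
    value: Any = context
    for key in dotted.split("."):
        value = value[key]  # raises KeyError for an unknown variable
    return value


def render(template: str, context: Mapping[str, Any]) -> str:
    """Replace every {{...}} placeholder with its value from the context."""
    return PLACEHOLDER.sub(lambda m: str(lookup(context, m.group(1))), template)


if __name__ == "__main__":
    # Context an editor integration might supply; the values are made up.
    ctx = {"editor": {"currentFile": {"path": "models/marts/fct_orders.sql"}}}
    print(render(PROMPTS["document_and_test_model"], ctx))
```

Because the template stores only the placeholder, the same saved prompt can be reused across every model. The editor supplies the path at the moment the prompt runs.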

Beyond Text: Visual Documentation with Mermaid Integration

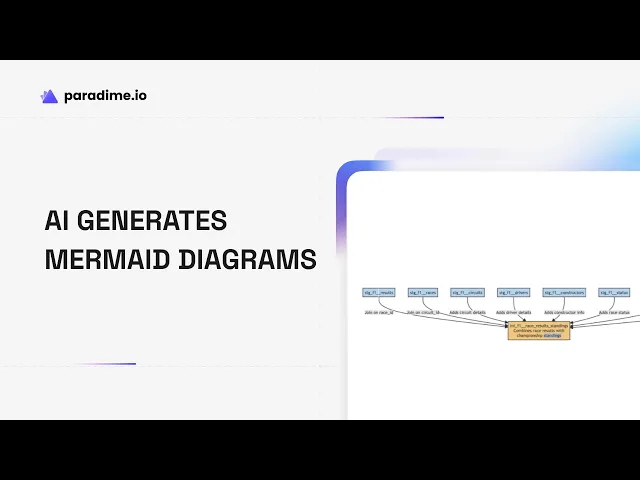

Analytics engineering increasingly requires visual communication, especially when explaining complex data lineage to stakeholders. DinoAI's .dinoprompts seamlessly integrate with Paradime's built-in Mermaid diagram support, allowing teams to generate visual documentation as easily as they create SQL models.

A custom prompt can analyze your dbt model's dependencies and relationships, then automatically generate a Mermaid diagram that shows data flow, source connections, and transformation logic. The diagram renders directly in Paradime's interface, creating visual artifacts that make complex models accessible to both technical and business audiences.
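The core transformation, from model dependencies to Mermaid text, is simple enough to sketch. The Python below assumes a hand-written mapping of each model to its upstream parents. A real prompt would derive that mapping from the project's refs and sources. The `to_mermaid` helper and the model names are hypothetical. The output uses Mermaid's standard `flowchart` and `-->` edge syntax.

```python
from typing import Dict, List


def to_mermaid(parents: Dict[str, List[str]], direction: str = "LR") -> str:
    """Render a {model: [upstream parents]} mapping as a Mermaid flowchart."""
    lines = [f"flowchart {direction}"]
    for model in sorted(parents):
        for parent in sorted(parents[model]):
            # Edges point downstream: each parent feeds the model that refs it.
            lines.append(f"    {parent} --> {model}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical lineage following a common staging/marts naming convention.
    lineage = {
        "stg_orders": ["raw_orders"],
        "stg_customers": ["raw_customers"],
        "fct_orders": ["stg_orders", "stg_customers"],
    }
    print(to_mermaid(lineage))
```

Sorting the models and parents keeps the generated diagram stable between runs, so the text diffs cleanly if it is committed alongside the model.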

Credit Saver Mode: Intelligence That Reduces Costs

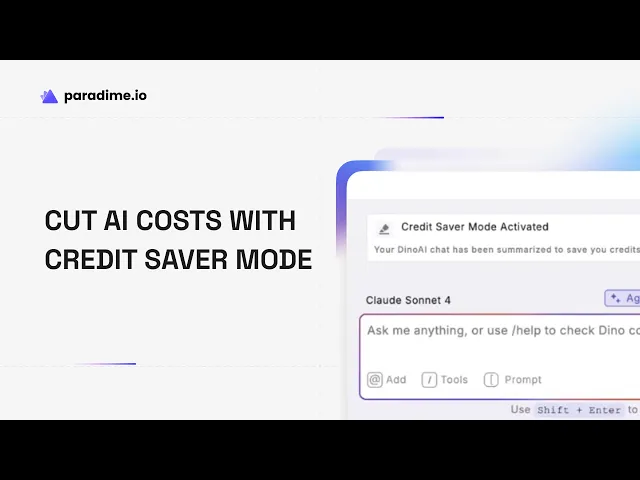

Perhaps the most innovative feature unveiled in this livestream addresses a universal concern with AI tools: escalating costs. Many AI assistants resend the full conversation history with every request. As a result, each new turn costs more than the last, and cumulative token usage grows roughly quadratically as a conversation develops.
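A rough calculation shows why resending full history gets expensive. Assume, purely for illustration, that every turn adds about $t$ tokens and that every request resends the whole conversation so far. Turn $k$ then sends $k\,t$ tokens, and the total after $n$ turns is:

```latex
T(n) = \sum_{k=1}^{n} k\,t = \frac{n(n+1)}{2}\,t
```

With $t = 2{,}000$ and $n = 20$, that gives $T = 210 \times 2{,}000 = 420{,}000$ tokens, even though only $40{,}000$ tokens of new content were written. The total is quadratic in the number of turns, so doubling the length of a session roughly quadruples its token bill.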

DinoAI's Credit Saver Mode represents a fundamentally different approach. The system intelligently recognizes when you're transitioning between tasks and automatically summarizes previous context rather than maintaining full token history. This isn't just compression—it's intelligent context management that preserves essential information while dramatically reducing computational overhead.

"Users don't have to think about this anymore," notes Fabio. "They can just simply do the work and the agent will take care of being efficient." This hands-off optimization allows teams to generate more code, documentation, and analysis while actually spending less on AI credits.

How Credit Saver Mode Works in Practice

When you're documenting multiple models in sequence, DinoAI recognizes that the full context of the first model isn't necessary for documenting the third. Instead of carrying forward thousands of tokens, it maintains a concise summary that preserves workflow continuity without the cost burden.
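One way summarize-on-task-switch behavior could work is sketched below in Python. This is an illustration under stated assumptions, not how Credit Saver Mode is built. Tasks are labeled explicitly here, whereas DinoAI infers the transitions. `count_tokens` is a crude word counter rather than a real tokenizer, and `summarize` simply truncates each turn where a real system would more plausibly ask the model for a summary. All names in the sketch are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


def count_tokens(text: str) -> int:
    """Crude tokenizer stand-in: one token per whitespace-separated word."""
    return len(text.split())


def summarize(turns: List[str], words_per_turn: int = 5) -> str:
    """Placeholder summarizer that keeps the first few words of each turn.

    A real system would more plausibly ask the model to write the summary.
    """
    return " | ".join(" ".join(turn.split()[:words_per_turn]) for turn in turns)


@dataclass
class Conversation:
    summarize_on_task_switch: bool
    history: List[str] = field(default_factory=list)
    summary: str = ""
    current_task: Optional[str] = None
    tokens_sent: int = 0

    def send(self, task: str, message: str) -> None:
        switched = self.current_task is not None and task != self.current_task
        if self.summarize_on_task_switch and switched:
            # Fold the finished task (and any earlier summary) into a short recap.
            earlier = [self.summary] if self.summary else []
            self.summary = summarize(earlier + self.history)
            self.history = []
        self.current_task = task
        self.history.append(message)
        # Each request carries the running summary plus the live history.
        self.tokens_sent += count_tokens(" ".join([self.summary, *self.history]))


def simulate(summarize_on_task_switch: bool) -> int:
    """Document three models, two turns each; return total tokens sent."""
    convo = Conversation(summarize_on_task_switch)
    for model in ["stg_orders", "stg_customers", "fct_orders"]:
        for _ in range(2):
            convo.send(model, f"Document {model}: " + "column " * 100)
    return convo.tokens_sent


if __name__ == "__main__":
    print("full history  :", simulate(False))
    print("with summaries:", simulate(True))
```

With these made-up inputs, the summarizing run sends 974 words of context and the full-history run sends 2,142. The exact ratio depends entirely on the placeholder tokenizer and summarizer. The design point is that the context cost of the third model no longer includes the full text of the first.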

This intelligent optimization becomes particularly valuable for teams working with large SQL files or complex model hierarchies, where traditional AI tools would quickly become prohibitively expensive.

Voice-to-Text: Natural AI Interaction

The evolution of AI interaction has progressed from typing every detail to using standardized prompts, and now to natural conversation. DinoAI's voice-to-text capability allows teams to literally talk through their requirements, making AI assistance feel more collaborative and intuitive.

This feature proves especially valuable for complex business logic explanations or brainstorming sessions. Instead of struggling to type out intricate domain knowledge, analytics engineers can speak naturally about their models, explaining the reasoning behind specific transformations or business rules. DinoAI captures this context and incorporates it into generated documentation or code comments.

Pull Request Automation: Closing the dbt™ Development Loop

Effective analytics engineering requires clear communication about changes, especially in collaborative environments. DinoAI's automated pull request generation leverages the {{git.diff.withOriginDefaultBranch}} variable to analyze your changes and create comprehensive descriptions that include:

Summary of modifications

Motivation behind changes

Testing recommendations

Impact assessment
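The sketch below shows in Python how a diff might be turned into a prompt that asks for those four sections. It assumes the text behind {{git.diff.withOriginDefaultBranch}} is an ordinary unified diff, similar to what `git diff origin/main...HEAD` prints when `main` is the default branch. The `changed_files` and `build_pr_prompt` helpers, the heading format, and the sample diff are hypothetical. Writing the actual description is left to the model.

```python
import re
from typing import List

# Header line git writes for each changed file in a unified diff.
# Assumes paths without spaces; quoted paths would need extra handling.
DIFF_HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$", re.MULTILINE)

# The four sections listed above; the Markdown headings are our own choice.
SECTIONS = [
    "Summary of modifications",
    "Motivation behind changes",
    "Testing recommendations",
    "Impact assessment",
]

SAMPLE_DIFF = """\
diff --git a/models/staging/stg_orders.sql b/models/staging/stg_orders.sql
index 1a2b3c4..5d6e7f8 100644
--- a/models/staging/stg_orders.sql
+++ b/models/staging/stg_orders.sql
@@ -1,3 +1,4 @@
 select
     order_id,
+    status,
     customer_id
"""


def changed_files(diff: str) -> List[str]:
    """Return the post-change path of every file touched by the diff."""
    return [match.group(2) for match in DIFF_HEADER.finditer(diff)]


def build_pr_prompt(diff: str) -> str:
    """Assemble a prompt asking for a sectioned PR description."""
    files = "\n".join(f"- {path}" for path in changed_files(diff)) or "- (none)"
    headings = "\n".join(f"## {section}" for section in SECTIONS)
    return (
        "Write a pull request description for the changes below.\n\n"
        f"Changed files:\n{files}\n\n"
        f"Use exactly these sections:\n{headings}\n\n"
        f"Diff:\n{diff}"
    )


if __name__ == "__main__":
    print(build_pr_prompt(SAMPLE_DIFF))
```

Listing the changed files ahead of the raw diff gives the model, and the reviewer, a quick map of the change before the line-level detail.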

This automation ensures that every pull request contains the context necessary for efficient code review, reducing back-and-forth communication and accelerating deployment cycles.

The Compound Effect: Faster dbt™ Development, Lower Costs

What makes these features revolutionary isn't just their individual capabilities—it's how they work together to create a compound effect. Standardized prompts reduce setup time, intelligent context management reduces costs, voice interaction captures nuanced requirements, and automated documentation maintains quality standards.

The result is a development environment where teams naturally become more productive while spending less on AI infrastructure. This inverts the traditional trade-off between speed and cost, creating a sustainable path for AI-assisted analytics engineering.

Ready to Build Faster and Spend Smarter?

The features demonstrated in this livestream are available now to all DinoAI users. From standardized prompt libraries to intelligent cost optimization, these capabilities provide immediate value for analytics engineering teams looking to work more efficiently.

Explore how DinoAI's latest features can transform your workflow today, for free!